New Evidence Challenges Claims That Gaza Aid Site Video Is AI-Generated

A video from southern Gaza that is circulating on social media has set off a vigorous debate over whether it was generated with artificial intelligence (AI). The footage surfaced earlier this week on platforms such as X (formerly Twitter). It shows a masked individual making a heart gesture and a “shaka” sign in front of a large crowd of Palestinians gathered along the fences of the Tal as Sultan aid distribution site in Rafah.

Authenticity Under Scrutiny: Is the Video AI-Generated?

Early speculation raised the possibility of AI manipulation, and experts and cybersecurity firms began examining the footage. A joint investigation by NBC News and GetReal Security, a cybersecurity company that specializes in detecting AI-generated content, found no evidence that the video had been artificially created or altered. The analysis concluded that the video was not produced by generative AI.

Geolocation and Contextual Verification

NBC News used geospatial data to geolocate the video to the recently established aid distribution site at Tal as Sultan. The site was built by the Coordinator of Government Activities in the Territories (COGAT), a unit of Israel’s Defense Ministry, in collaboration with the Gaza Humanitarian Foundation (GHF). A GHF spokesperson confirmed that the foundation’s team had originally shared the footage but could not confirm the identity of the individual in the clip.

In a statement, the GHF said, “Any claims that our footage is fabricated or AI-generated are false and irresponsible.” The foundation has presented the footage as genuine documentation of its ongoing humanitarian work.

Satellite and Drone Evidence Reinforce Video’s Authenticity

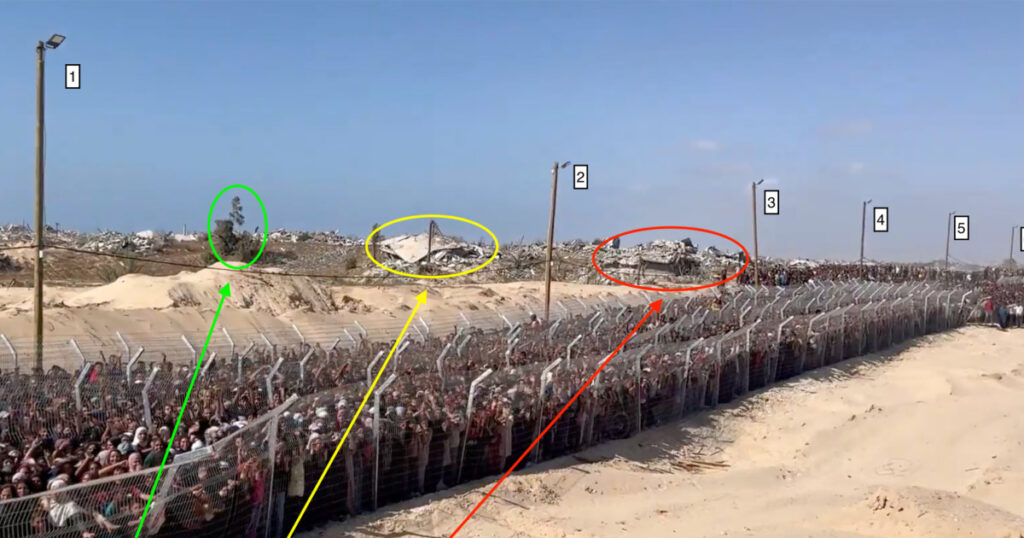

Satellite images from Planet Labs and drone footage captured by the Israeli military corroborate the physical features visible in the video. Both sources show the same row of light poles and fencing. They also show recently damaged and collapsed structures and overgrown vegetation behind the barriers near the Mediterranean coast, all of which match the scene in the footage.

Public Skepticism and Expert Analysis

Following the video’s release, social media users expressed skepticism about its authenticity. Comments ranged from outright accusations of AI fabrication to doubts about the footage’s credibility. One user remarked, “This looks like AI-generated content; it’s hard to believe anything without verification.”

Hany Farid, a digital forensics expert and co-founder of GetReal Security, analyzed the footage for NBC News. He noted the absence of typical AI artifacts. He also pointed to the consistency of fine visual details, such as the crisp “Ray-Ban” logo on the individual’s sunglasses, which AI generators often struggle to render accurately. “The camera’s smooth panning and consistent shadows suggest this is a genuine recording, not a synthetic creation,” Farid said.

Technical Clues Point Toward Authenticity

Further analysis showed that the individual in the video was wearing Oakley S.I. gloves, a brand frequently seen on U.S. contractors operating in Gaza, with sightings dating back to early 2023. This detail supports the video’s authenticity, as does the natural soundscape, which includes wind noise and English-language commentary. Because the audio matches the visual cues, AI fabrication is even less likely.

Broader Context: The Rise of AI-Generated Content in Conflict Zones

The emergence of AI-generated videos related to Gaza is part of a broader trend. In February, President Donald Trump shared an AI-generated video on his Truth Social platform that reimagined Gaza as a luxury resort. The video showed Elon Musk throwing money and Trump lounging poolside with Israeli Prime Minister Benjamin Netanyahu. Such cases highlight the growing challenge of verifying visual content amid misinformation campaigns.

Experts warn that generative AI poses a twofold risk. It can produce convincing fake videos that distort reality, and its very existence makes it easier to dismiss genuine footage as fake. “Generative AI is a double-edged sword: it can spread misinformation and cast doubt on genuine events, making it harder for the public to trust visual evidence,” Farid said.

Conclusion: The Importance of Verification in the Digital Age

This case underscores the critical need for rigorous verification methods when assessing visual content, especially in conflict zones where misinformation can have serious consequences. The combination of geospatial data, satellite imagery, and expert analysis provides a robust framework for establishing authenticity. As AI technology advances, ongoing vigilance and technological safeguards will be essential to maintain trust in digital media.

Authors:

Marin Scott, Associate Reporter, Social Newsgathering Team

Colin Sheeley, Senior Reporter, NBC News, New York

Tavleen Tarrant contributed to this report.